Confidence interval: hypothesis testing

Refer to the Forest Plot sheet in the User Manual for details on how to run the analysis.

The workbooks and a PDF version of this guide can be downloaded from here.

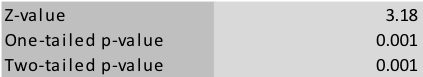

The confidence interval of the combined effect size in Figure 1 does not include zero; with a confidence level of 95%, this means that the two-tailed p-value is smaller than .05. In traditional terminology, the meta-analytic effect is statistically significant. If the aim of the meta-analysis is to test the hypothesis that there is an effect, then in this example the null hypothesis (that there is no effect) can be rejected and the alternative hypothesis (that there is an effect) is deemed more likely. The user can find the corresponding Z-value and p-values (one-tailed and two-tailed) in the column on the left side of the forest plot sheet in Meta-Essentials (see Figure 3).

Figure 3: Part of forest plot sheet in Meta-Essentials, with Z-value and p-values
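The link between the confidence interval and the p-value can be made concrete. The Python sketch below assumes that the interval is symmetric and based on the normal distribution (estimate ± 1.96 × SE); it recovers the standard error from the interval width and then computes Z and the one- and two-tailed p-values. The function names and input values are hypothetical, and Meta-Essentials may construct its interval differently (for example with a t-distribution), so its reported values need not match this sketch exactly.

```python
from math import erf, sqrt


def normal_cdf(x: float) -> float:
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def z_and_p_from_ci(estimate, lower, upper, z_crit=1.959964):
    """Back out Z and one-/two-tailed p-values from a confidence interval.

    Assumption: the interval is symmetric and normal-based, i.e.
    lower = estimate - z_crit * SE and upper = estimate + z_crit * SE.
    """
    se = (upper - lower) / (2.0 * z_crit)  # half-width divided by the critical value
    z = estimate / se                       # test statistic for H0: effect = 0
    p_one_tailed = 1.0 - normal_cdf(abs(z))  # in the direction of the observed effect
    p_two_tailed = 2.0 * p_one_tailed
    return z, p_one_tailed, p_two_tailed


# Hypothetical combined effect of 0.50 with a 95% CI of [0.20, 0.80]
z, p1, p2 = z_and_p_from_ci(0.50, 0.20, 0.80)
print(f"Z = {z:.2f}, one-tailed p = {p1:.4f}, two-tailed p = {p2:.4f}")
```

With these hypothetical inputs the sketch gives Z ≈ 3.27 and a two-tailed p well below .05. An interval whose lower bound is exactly zero yields a two-tailed p of about .05, which is why "the 95% interval excludes zero" and "p < .05" are two ways of saying the same thing.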

The forest plot in Figure 1 shows that research results have been “contradictory” or “ambiguous”. Some studies have shown statistically significant positive effects, others have shown statistically significant negative effects, and still others have shown effects that are not statistically significant. In standard practice, meta-analysis aims to “solve” the problem that study results differ and apparently contradict each other by generating a “combined” effect. The combined effect might be significantly different from zero (or not). This type of result from a standard-practice meta-analysis is considered very valuable because it appears to solve the problem of “contradictory” evidence or, more often, the problem of “insignificant results”. Because meta-analysis functions as a more powerful significance test, it is taken to generate a more useful and more convincing result than a single study. For example, suppose that the forest plot in Figure 1 shows the results of studies of the effects of a specific educational reform intervention. These studies have been conducted in different countries, in different contexts (e.g., in schools managed by the government and schools managed by non-governmental organisations), and with different types of students (e.g., different genders, ages, and social backgrounds). The statistical significance of the combined effect might now be interpreted as evidence (or “statistical proof”) that this specific intervention “significantly” improves educational outcomes. This result, in turn, might be the basis of a policy decision to invest in this type of educational intervention.

However, a Z-value or p-value is not an effect size. A government or an education minister is interested not merely in whether an intervention has a positive effect but also in how large that effect is. Statistical significance loses its relevance when “samples” get very large. Because the pooled sample size in a meta-analysis is usually very large, the combined effect will almost certainly be significant, even if the combined effect size is very small. If it is not, a statistically significant combined effect can be generated by adding studies to the meta-analysis. If the combined effect is taken as the basis for a decision about implementing this intervention, its estimated effect size and its precision (or lack of it, as indicated by the width of the confidence interval) should be interpreted. In this example, policy makers should decide whether an effect size of 0.20 (the lower bound of the confidence interval of the combined effect) is large enough to justify a decision in favor of the intervention.
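The claim that significance can be produced simply by adding studies can be illustrated numerically. The sketch below pools k identical hypothetical studies, each with a tiny standardized mean difference (d = 0.05, 100 participants per group), using inverse-variance (fixed-effect) weights, and finds the smallest k at which the combined effect becomes significant. The fixed-effect model, the common large-sample variance approximation for Cohen's d, the function names, and all numbers are assumptions chosen for illustration; they are not Meta-Essentials' own settings.

```python
from math import erf, sqrt


def normal_cdf(x):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def var_cohens_d(d, n1, n2):
    """Common large-sample approximation of the sampling variance of Cohen's d."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2.0 * (n1 + n2))


def fixed_effect_pool(effects, variances):
    """Inverse-variance weighted (fixed-effect) combined estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    se = sqrt(1.0 / sum(weights))
    return estimate, se


def studies_needed(d=0.05, n_per_group=100, alpha=0.05, max_k=10_000):
    """Smallest number of identical hypothetical studies for which the pooled
    fixed-effect estimate is significant at level alpha (two-tailed)."""
    v = var_cohens_d(d, n_per_group, n_per_group)
    for k in range(1, max_k + 1):
        estimate, se = fixed_effect_pool([d] * k, [v] * k)
        p_two_tailed = 2.0 * (1.0 - normal_cdf(abs(estimate / se)))
        if p_two_tailed < alpha:
            return k
    return None  # not reached within max_k studies


k = studies_needed()
print(f"d = 0.05 becomes 'significant' after pooling {k} studies")
```

Under these assumptions the combined effect stays at 0.05 for every k, yet it crosses the .05 threshold at 31 studies (6,200 participants): the effect size has not changed at all, only the precision has.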

A serious problem with this approach is that none of the usual requirements for null hypothesis significance testing is met in a meta-analysis. The core requirement for a significance test (or for any form of inferential statistics in general) is that the effect is observed in a random (or probability) sample from a defined population (enabling a sampling frame). In meta-analysis, however, there is no population and there is no probability sample. The input of a meta-analysis consists of the results of studies. These studies have generated estimates of effect sizes in populations, which are represented in the forest plot by point estimates and their confidence intervals. This means that the sample in a meta-analysis (if any) is a sample of populations, and that the combined effect size (and its confidence interval) calculated in the meta-analysis is an inference about the effect in the population of these populations. This super-population might coincide with the domain of the study (as specified in assumption 3). However, the set of populations that have been studied (and whose results have been entered in the meta-analysis) is clearly not (and cannot be) a probability sample from that domain. Usually, a study is conducted in a sample (if a sample is drawn at all) from a population chosen by convenience. A sample for a study of an intervention, for instance, is normally drawn from a population to which the researcher happens to have access (e.g., the schools in a school district where a gatekeeper happens to be a friend or former colleague of the researcher, or the schools in a district that happens to be managed by an innovative governor). Because a set of effect sizes in a meta-analysis is not a probability sample, null hypothesis significance testing is statistically not appropriate.

The fact that populations (or studies) cannot be treated as a probability sample is usually evident from the observed effect sizes themselves. In the forest plot in Figure 1, for instance, it is obvious that the difference between the results of studies 1-4 on the one hand, and of studies 5 and 6 on the other, cannot be explained by sampling variation. Studies 1-4 might have been studies of probability samples from one population, and studies 5 and 6 might have been studies of samples from another population, but it is statistically improbable that these two populations are identical. For instance, studies 1-4 might have been four field experiments in a small number of adjacent school districts in one country (say, the United Kingdom, coded AA on the input sheet), whereas studies 5 and 6 might have been two field experiments in another country (BB). When the pattern of the forest plot itself suggests that there are different types of population with rather different effect sizes (as in this example), the “combined” effect size is no longer a useful parameter.
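Whether the spread of effect sizes exceeds what sampling variation alone would produce is commonly quantified with Cochran's Q and the I² statistic. The sketch below computes both for invented effect sizes that mimic the pattern described (four studies near 2.0, two near zero, each with an assumed standard error of 0.25); these values are hypothetical and are not read from Figure 1. A p-value for Q can be obtained from a chi-squared distribution with k - 1 degrees of freedom (for example with `scipy.stats.chi2.sf`), which the sketch omits to stay within the standard library.

```python
def cochran_q_and_i2(effects, variances):
    """Cochran's Q (weighted squared deviations from the fixed-effect mean)
    and I^2 = max(0, (Q - df) / Q), with df = k - 1."""
    weights = [1.0 / v for v in variances]
    mean = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - mean) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0  # I^2 is 0 when there is no spread
    return q, df, i2


# Invented effect sizes: studies 1-4 (country AA) and studies 5-6 (country BB)
effects = [1.9, 2.1, 2.0, 1.8, -0.1, 0.0]
variances = [0.25 ** 2] * len(effects)  # assumed SE of 0.25 for every study
q, df, i2 = cochran_q_and_i2(effects, variances)
print(f"Q = {q:.1f} on {df} df, I^2 = {i2:.0%}")
```

For these invented values Q is about 86 on 5 degrees of freedom and I² is about 94%, i.e., almost all of the observed variation would be attributed to real differences between populations rather than to sampling error.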

Estimating a combined effect within a subgroup might still be useful. In this (fictitious) example, it might be useful for policy makers to have an estimate of the combined effect size of the intervention in the United Kingdom (which will be close to 2.0, possibly an effect size large enough to recommend the intervention) and another for the other country (which will be negative and close to zero, from which it might be concluded that the education ministry in that country should not fund that specific intervention). This issue is discussed further in the section on subgroup analysis.
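Subgroup estimates of this kind amount to pooling the studies separately within each subgroup. The sketch below does this with inverse-variance (fixed-effect) weights, using the country codes AA and BB from the example and invented effect sizes; the data, the function name `pool_by_subgroup`, and the choice of a fixed-effect model are assumptions for illustration, and the subgroup analysis in Meta-Essentials may use a different model.

```python
from collections import defaultdict
from math import sqrt


def pool_by_subgroup(studies, z_crit=1.959964):
    """Fixed-effect (inverse-variance) estimate, SE and 95% CI per subgroup.

    `studies` is an iterable of (subgroup_code, effect_size, variance) tuples.
    """
    groups = defaultdict(list)
    for code, effect, variance in studies:
        groups[code].append((effect, variance))
    results = {}
    for code, items in groups.items():
        weights = [1.0 / v for _, v in items]
        estimate = sum(w * y for w, (y, _) in zip(weights, items)) / sum(weights)
        se = sqrt(1.0 / sum(weights))
        results[code] = (estimate, se, estimate - z_crit * se, estimate + z_crit * se)
    return results


# Invented data: (country code, effect size, variance); SE of 0.25 assumed for all
studies = [("AA", 1.9, 0.0625), ("AA", 2.1, 0.0625), ("AA", 2.0, 0.0625),
           ("AA", 1.8, 0.0625), ("BB", -0.1, 0.0625), ("BB", 0.0, 0.0625)]
for code, (est, se, lower, upper) in pool_by_subgroup(studies).items():
    print(f"{code}: {est:.2f} [{lower:.2f}, {upper:.2f}]")
```

For these invented values the AA subgroup pools to 1.95 (95% CI roughly 1.71 to 2.19) and the BB subgroup to -0.05 (roughly -0.40 to 0.30), showing how a single combined estimate would hide two very different effects.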